White paper summary:

“Interactive Applications Powered by Video at the Cloud Edge”

ASIC-based video encoders deliver the total cost of ownership (TCO) required for cloud gaming, real-time communications (RTC), and interactive video applications.

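This white paper introduces trends in video streaming and an emerging edge cloud architecture built on the capabilities of dedicated, high-performance video processing chips. It examines the server-side technology and architecture of streaming applications and explores the economics and feasibility of running these services.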

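The white paper is divided into three main sections. The first covers the growth drivers of video applications and markets, along with TIRIAS Research's forecast of streaming trends. The second discusses the architectural considerations for forward-looking video services. The third discusses operating costs, presents a total cost of ownership model for video services based on edge servers, and compares the relevant technologies. It extends TIRIAS Research's earlier white paper, “The Emergence of Cloud Mobile Gaming”, which also describes novel applications, such as emerging head-mounted displays and mobile interactive apps, powered by ASIC video processing chips and ARM-architecture servers.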

The full white paper:

Video & Interactive Media at the Cloud Edge

Few topics in technology receive as much attention as edge computing and its bold vision for the future. Placing compute resources closer to users and sensors lowers latency, allowing new cloud-based interactive services to run with the responsiveness of local applications. In principle, consumer applications can span interactive social video, game streaming, virtual reality, and augmented reality experiences powered by servers that live at the network edge. Users engage with apps just as they do today, but the application runs as a service in the cloud. For interactive applications – like games, social media, or communications apps – video compression packs the application’s visual output at the edge and unpacks it on the user’s screen. Since all the work is performed in servers at the edge, client devices can be cheap, low power, wearable, and mobile. Reducing the cost of delivering low latency is critical to emerging interactive edge services.

Operational costs are managed by placing application servers and video encoding systems together in regional or metropolitan data centers. Likewise, employing specialized chips – Application-Specific Integrated Circuits (ASICs) – for video encoding can significantly reduce latency and increase video quality. Using these specialized video encoders at local points of presence can achieve the latency that cell-tower placement would provide, without its cost.

Advanced video CODECs must be combined with low-latency processing to deliver visual fidelity for interactive applications and collaborative social interaction. Dedicated video processing, including ASIC video encoders and core logic, can radically reduce the hardware required for video processing. And finally, placing compute at regional points of presence creates a balance between the vision of edge computing and the economics of operating consumer services at scale.

The Opportunity: Powering the Exponential Growth of Video

Video technology has demonstrated remarkable versatility, with the potential to transmit real-time, interactive experiences to ubiquitous devices like PCs, TVs, and smartphones. More fluid collaborative and interactive experiences require higher resolutions and frame rates at lower latency. The challenges are controlling operational cost, maintaining quality of service, guaranteeing high visual quality, and reducing motion-to-photon latency. A new generation of dedicated video transcoders can reduce the compute required at the same quality tenfold (10X) and improve performance per watt twentyfold (20X), improving the viability of video services.

NETINT solves the dilemma video services face when seeking to grow their user base economically while maintaining a high-quality video experience. The company is a pioneer in ASIC-based video encoding and has introduced a family of dedicated video processors that combine video encoding, solid-state storage, and machine learning. These processors radically reduce the server footprint required to scale interactive entertainment, such as gaming and mixed reality, to desktop, mobile, and head-mounted display applications from the cloud edge.

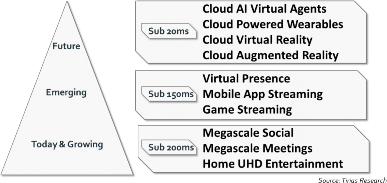

Forty Million Cloud Encoded HD Streams By 2025

The legacy of internet video is video on demand (VOD) – stored video delivered as entertainment or instructional content whenever the viewer requests the file. This content is uploaded, transcoded into multiple quality and resolution levels, and distributed (streamed) over the internet. Live streams require real-time transcoding for rapid distribution to users. These streams can include a full spectrum of interactivity, from mobile social streams to interactive applications. Cloud-based applications can include games and apps that originated on PCs or smartphone platforms, running in the cloud, encoded as video, and experienced like native applications by remote users. TIRIAS Research estimates that high-quality, high-definition cloud video streams will expand from 4 million encoded concurrent streams today to over 40 million by 2025.
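To put the forecast in perspective, the tenfold increase can be converted into an implied compound annual growth rate. This is a minimal sketch: the summary does not state which year “today” refers to, so the baseline years 2019 to 2021 are assumptions, and `implied_cagr` is a hypothetical helper name.

```python
def implied_cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# 4 million concurrent HD streams "today" growing to 40 million by 2025.
# Assumption: the baseline year is unknown, so a few candidates are compared.
for baseline_year in (2019, 2020, 2021):
    horizon = 2025 - baseline_year
    rate = implied_cagr(4_000_000, 40_000_000, horizon)
    print(f"baseline {baseline_year}: {horizon} years -> {rate:.1%} per year")
```

With a 2020 baseline, the forecast implies roughly 58% compound annual growth in concurrent encoded streams; a shorter or longer horizon shifts that figure substantially.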

Growth in social or user-generated video consumption has been driven mainly by mobile networks, first by 4G and now by 5G-connected devices. Emerging application streaming services require the alignment of content providers, service providers, processing technology, and low-latency network infrastructure.

Cloud Edge Architectures for Emerging Applications

Emerging applications and services require technology that can scale economically to many users without concern for resource constraints. Cloud streaming is extremely demanding, presenting a combination of technical challenges. Unlike a webpage where content comes from many locations, streamed video can be aggregated in the cloud and packaged for rapid delivery at the cloud edge. High video quality and low latency provide users with a sense that they are experiencing a local, native application.

Compute Density Creates Viable Edge System Architectures

Moving video processing to the edge requires a massive improvement in computational efficiency. Locating compute servers at the cloud edge means placing them at cell towers or local points of presence, largely removing distance as a network latency factor. In the broadest sense, the cloud edge can describe small data centers operating at local points of presence or within cell tower base stations. Base station deployments are expensive and physical space is minimal, creating formidable hurdles for consumer and commercial services. Placing cloud servers in regional points of presence is the most viable model, and it allows a metropolitan area to be served by a single data center. Such a facility can tune the server environment and provide best-in-class systems architecture, networking, and operational efficiency, delivering high availability with a latency-optimized solution.
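How much latency does distance actually add? A rough estimate uses the propagation speed of light in optical fiber, about two thirds of its speed in vacuum, or roughly 5 microseconds per kilometer one way. The sketch assumes a refractive index of 1.47 and purely illustrative distances; `fiber_rtt_ms` is a hypothetical helper, and real network paths add routing detours, switching, queuing, and encode/decode time on top of propagation.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s
FIBER_REFRACTIVE_INDEX = 1.47      # assumption: typical silica single-mode fiber


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring routing, queuing and processing."""
    one_way_s = distance_km * FIBER_REFRACTIVE_INDEX / SPEED_OF_LIGHT_KM_S
    return 2 * one_way_s * 1000.0


# Illustrative distances only; they are not taken from the paper.
for label, km in [("cell-tower site", 1), ("metro data center", 50), ("distant cloud region", 1500)]:
    print(f"{label:>20}: {fiber_rtt_ms(km):6.2f} ms round trip")
```

Under these assumptions, a 50 km metro path adds only about 0.5 ms of round-trip propagation delay, compared with roughly 15 ms for a region 1,500 km away. That gap is why a single regional data center can serve a metropolitan area with near-edge latency.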

The Total Operating Cost of Streaming

The major factors that determine the cost of operating cloud or edge servers include the capital cost of acquiring the servers and their projected useful life, power consumption, cooling, and facilities.
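These cost factors can be combined into a simple model. The sketch is one possible reading, not the paper's published method: it assumes TCO equals the full purchase price of the servers plus their electricity cost over the amortization period, with the electricity bill multiplied to stand in for cooling and facilities. The defaults mirror the parameters of the paper's TCO example ($0.08 per kWh, a 3X multiple, three years). `ServerOption`, `tco_usd`, and all field names are hypothetical; per-server price, power draw, and channel density are inputs you would need to measure, and none come from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class ServerOption:
    """Hypothetical per-server inputs for one encoding platform (not figures from the paper)."""
    name: str
    server_price_usd: float   # purchase price of one server
    watts_per_server: float   # average wall power under load
    channels_per_server: int  # concurrent 1080p30 channels one server sustains


def tco_usd(opt: ServerOption, channels: int, years: float = 3.0,
            usd_per_kwh: float = 0.08, facility_multiple: float = 3.0) -> float:
    """Server capital cost plus energy cost over `years`.

    The electricity bill is multiplied by `facility_multiple` as a stand-in
    for cooling and facilities overhead.
    """
    servers = math.ceil(channels / opt.channels_per_server)  # partial servers cannot be bought
    capex = servers * opt.server_price_usd
    hours = years * 365 * 24
    energy_kwh = servers * opt.watts_per_server / 1000.0 * hours
    opex = energy_kwh * usd_per_kwh * facility_multiple
    return capex + opex
```

Channel density enters through the server count, so a platform that packs more channels into each server lowers both the capital and the energy terms at once.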

In the case of edge computing, the cost to deploy servers increases as you get closer to the edge. Generally, placing servers at scale in base stations is the most expensive – space is almost always incredibly limited, and operational costs such as rent, site cooling, and maintenance can be high. Dedicated rooftop enclosures, remote locations, and tall office building locations boost the expense of adding on-premises edge servers.

The higher cost and space constraints of base station placement lead some to conclude that we must settle for servers “close to the base station”, in regional points of presence.

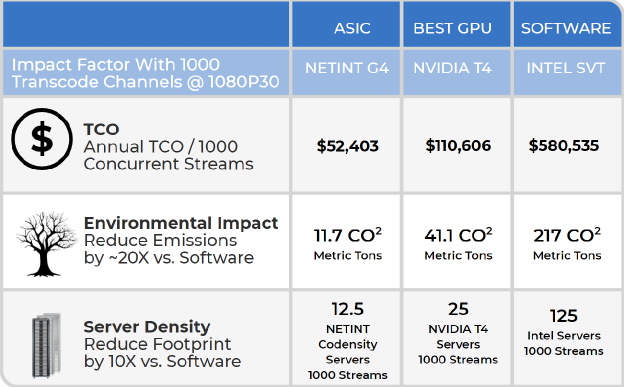

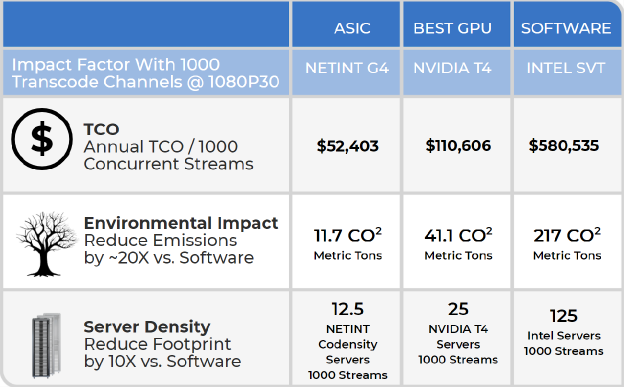

ASIC Encoding – Lowest TCO

The example TCO comparison shows that the operating cost of video encoding for one thousand 1080p30 channels running x265 Medium is $580,533 with a software-based solution and $110,606 with GPUs. ASIC-based encoders reduce the total cost of ownership (TCO) to just $52,403, more than a factor of ten below the software cost and less than half the GPU cost. These calculations use a power price of $0.08 per kWh and a 3X multiple to account for cooling and facilities. Servers are priced and amortized over three years.
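The quoted totals can be normalized per channel and compared directly. This sketch only re-derives ratios from the three figures given in the paragraph above; the variable names are illustrative, and spreading the cost over 36 months simply follows the three-year amortization.

```python
# Three-year TCO per 1,000 concurrent 1080p30 channels, as quoted in the paper.
tco_per_1000 = {"software": 580_533, "GPU": 110_606, "ASIC": 52_403}
MONTHS = 36  # three-year amortization period

asic = tco_per_1000["ASIC"]
for platform, cost in tco_per_1000.items():
    per_channel = cost / 1000                 # dollars per channel over three years
    per_channel_month = per_channel / MONTHS  # same cost spread evenly per month
    print(f"{platform:>8}: ${per_channel:7.2f} per channel, "
          f"${per_channel_month:5.2f} per channel-month, {cost / asic:5.2f}x ASIC")
```

The software baseline works out to about 11.1 times the ASIC cost and the GPU option to about 2.1 times. The factor-of-ten saving therefore applies to the software comparison; against GPUs, ASIC encoding roughly halves the cost, at about $1.46 per channel per month.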

The Future

Edge deployment of interactive application services will require high-density compute to minimize footprint, maximize reliability, and decrease operating costs. Applications at the cloud edge will rely heavily on video encoding technology and upgraded network technology, including 5G, to deliver these experiences to end users with the lowest possible latency. As networks and video encoding improve, cloud applications will respond almost instantaneously, providing experiences that fool users’ senses and make remote applications seem to run locally on high-performance clients. Emerging ASIC-based encoders are a breakthrough in density, performance, and cost, enabling applications to scale economically at the edge. By lowering latency, they allow real-time technologies, including machine learning and sensor data fusion, to create entirely new, intelligent, and interactive experiences.

For more information on NETINT edge encoders, visit www.netint.ca. To read more on the cutting edge of video processing, edge computing, machine learning, and more, visit www.TIRIASresearch.com/research.